System Design 1

What is System Design?

System design is the process of defining a system's architecture, components, modules, interfaces, and data to meet specific requirements. In effect, it produces a detailed blueprint for building software or hardware systems that are efficient, scalable, and reliable.

It is the step between understanding user needs (requirements analysis) and writing code (implementation). It focuses on high-level structure, interactions, and non-functional aspects such as performance, security, and maintainability, rather than on the code itself.

How to approach system design?

System design is extremely practical, and there is a structured way to approach it.

- Understand the problem statement

- Break it down into essential components/features, e.g. Social Media Feed, Notifications

- Dissect each component into sub-components (if required), e.g. Aggregator and Recommendation Generator in the Feed

- Now, for each sub-component, look into:

  - Database and Caching

  - Scaling & Fault Tolerance

  - Async processing (Delegation)

  - Communication

How do you know that you have built a good system?

Every system is “infinitely” buildable, so it is important to know when to stop evolving it. A system is in good shape when:

- You broke your system into components

- Every component has a clear set of responsibilities (Mutually Exclusive)

- For each component, you have at least the basic technical details figured out:

  - Database and Caching

  - Scaling & Fault Tolerance

  - Async processing (Delegation)

  - Communication

- Each component (in isolation) is:

  - scalable → horizontally scalable

  - fault tolerant → there is a plan for recovery in case of a failure

    - the data and the server itself should auto-recover

  - available → the component keeps functioning even when some other component “fails”

Relational Databases

Databases are the most critical component of any system. They make or break a system.

Data is stored and represented in rows and columns. You pick a relational database for relations and ACID guarantees.

ACID properties:

- A - Atomicity

- C - Consistency

- I - Isolation

- D - Durability

A - Atomicity

All statements within a transaction take effect, or none of them do.
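As a minimal sketch of atomicity, the snippet below uses Python's built-in `sqlite3` module with an in-memory database. The `accounts` table, the `transfer` helper, and the account names are hypothetical and exist only for illustration. A transfer that would overdraw an account fails a `CHECK` constraint, and the credit already applied in the same transaction is rolled back with it.

```python
import sqlite3

# In-memory database with a hypothetical accounts table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 0)")
conn.commit()

def transfer(conn, src, dst, amount):
    """Move money between accounts; both updates commit or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False  # the credit to dst was rolled back as well

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))

ok = transfer(conn, "alice", "bob", 30)       # succeeds
failed = transfer(conn, "alice", "bob", 500)  # violates CHECK, so nothing is applied
```

After both calls, only the first transfer is visible in the table.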

C - Consistency

All data in the database must conform to the defined rules, so no constraints are violated. This is enforced with constraints, cascades, and triggers. For example, a foreign key check does not let you delete a parent row while a child row exists (this is configurable, e.g. with a cascading delete).
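The foreign key behaviour described above can be sketched with `sqlite3`. The `users`, `posts`, and `comments` tables are hypothetical. Note that SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` is set; other databases typically enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY)")
# posts blocks deletion of its parent; comments is deleted along with its parent.
conn.execute(
    "CREATE TABLE posts (id INTEGER PRIMARY KEY, "
    "user_id INTEGER NOT NULL REFERENCES users(id))"
)
conn.execute(
    "CREATE TABLE comments (id INTEGER PRIMARY KEY, "
    "user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE CASCADE)"
)
conn.execute("INSERT INTO users VALUES (1)")
conn.execute("INSERT INTO posts VALUES (10, 1)")
conn.execute("INSERT INTO comments VALUES (20, 1)")
conn.commit()

try:
    conn.execute("DELETE FROM users WHERE id = 1")
    delete_blocked = False
except sqlite3.IntegrityError:
    delete_blocked = True  # the parent still has a child row in posts
    conn.rollback()

conn.execute("DELETE FROM posts WHERE user_id = 1")
conn.execute("DELETE FROM users WHERE id = 1")  # now allowed; comments cascade
conn.commit()
remaining_comments = conn.execute("SELECT COUNT(*) FROM comments").fetchone()[0]
```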

I - Isolation

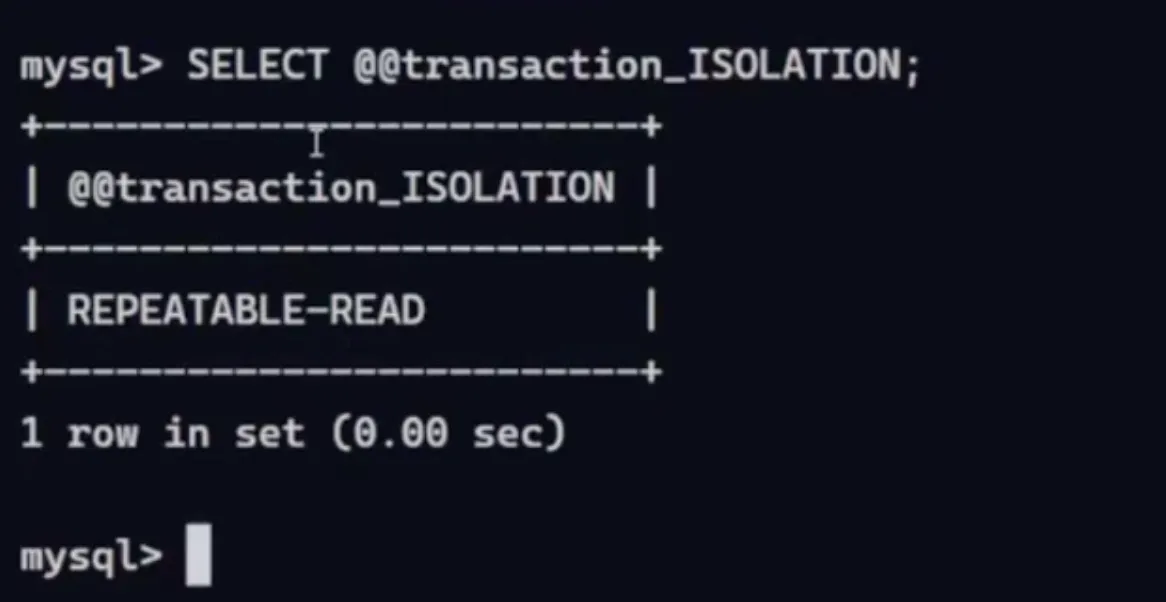

Concurrent transactions should not interfere with each other. This is achieved through various levels of isolation (read uncommitted, read committed, repeatable read, serializable), chosen according to the needs of the application.

D - Durability

When a transaction commits, its changes survive outages.

Database Isolation Levels

Database isolation levels refer to the degree to which a transaction must be isolated from the data modifications made by other transactions.

Isolation levels dictate how much one transaction knows about the others.

The SQL standard defines four isolation levels.

Read uncommitted

This is the lowest isolation level, and it allows a transaction to read data changes that have not yet been committed by other transactions. This can lead to “dirty reads,” where a transaction reads data that is later rolled back.

e.g. Transaction A reads data modified by transaction B before B commits.

Read committed

This level guarantees that a transaction will only read data that has been committed. It prevents dirty reads but allows non-repeatable reads, where the same query can return different results at different times within one transaction.

e.g. Transaction A sees data modified by transaction B only after B commits.

Repeatable read (default in MySQL InnoDB)

This level ensures that repeated reads of the same rows within a transaction return the same data. However, it can still allow phantom reads, where rows matching a query are inserted or deleted by other transactions while the transaction runs.

e.g. Transaction A reads data that was visible at the start of transaction A, even if transaction B has committed changes in between.
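To make the difference between read committed and repeatable read concrete, here is a toy model in plain Python. It is not how any particular database implements isolation (real engines use locks or multi-version concurrency control); `ToyDatabase` and `Transaction` are hypothetical classes that only capture which committed version each level is allowed to see.

```python
class ToyDatabase:
    """Toy single-value store that keeps every committed version."""

    def __init__(self, value):
        self.versions = [value]  # committed values, oldest first

    def commit(self, value):
        self.versions.append(value)


class Transaction:
    def __init__(self, db, level):
        self.db = db
        self.level = level
        self.snapshot = len(db.versions) - 1  # index of the latest commit at start

    def read(self):
        if self.level == "repeatable read":
            return self.db.versions[self.snapshot]  # same version on every read
        return self.db.versions[-1]  # "read committed": latest committed value


db = ToyDatabase(100)
rc = Transaction(db, "read committed")
rr = Transaction(db, "repeatable read")
first = (rc.read(), rr.read())
db.commit(150)  # transaction B commits in between
second = (rc.read(), rr.read())
```

The read-committed transaction sees the new value on its second read (a non-repeatable read), while the repeatable-read transaction keeps seeing the value from when it started.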

What is a phantom read?

Phantom reads occur when one transaction reads a set of rows and then another transaction inserts or deletes rows that match the search criteria of the first transaction.

If the first transaction runs the same query again, it sees a different set of rows than before, leading to inconsistent results.

Serializable

This is the highest isolation level. It guarantees that transactions are fully isolated from each other. The outcome is the same as if the transactions had been executed one after another, which prevents dirty reads, non-repeatable reads, and phantom reads.

This reduces the throughput of the system, and things can be a lot slower.

e.g. Transaction A is processed as if no other transaction were running concurrently. If a conflicting transaction is running, A typically waits for it to commit or roll back, or one of the two is aborted and retried.

Scaling Databases

- These techniques apply to most databases, relational and non-relational.

Vertical Scaling

- Add more CPU, RAM, or disk to the database server

- Usually requires downtime while the machine is resized and rebooted

- Lets the same machine handle more load, up to the limits of the hardware

Horizontal Scaling

Read Replicas

- Useful when the read-to-write ratio is high, e.g. 90:10

- Reads move to other databases (replicas) so that the primary (“master”) is free to handle writes

- API servers should know which DB to connect to. Each server keeps two connections: one to the primary for writes and another to a replica for reads.
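A minimal sketch of the read/write split described above. `Connection` is a hypothetical stand-in for a real database connection, and treating every statement that starts with `SELECT` as a read is a simplifying assumption (real routers also have to handle transactions and reads that must see the latest write).

```python
import itertools


class Connection:
    """Hypothetical stand-in for a real database connection."""

    def __init__(self, name):
        self.name = name
        self.executed = []

    def execute(self, sql):
        self.executed.append(sql)
        return self.name  # report which node served the statement


class RoutingClient:
    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas)  # round-robin over replicas

    def execute(self, sql):
        is_read = sql.lstrip().upper().startswith("SELECT")
        conn = next(self._replicas) if is_read else self.primary
        return conn.execute(sql)


primary = Connection("primary")
client = RoutingClient(primary, [Connection("replica-1"), Connection("replica-2")])
served_by = [
    client.execute("INSERT INTO users VALUES (1)"),
    client.execute("SELECT * FROM users"),
    client.execute("SELECT * FROM users"),
    client.execute("UPDATE users SET id = 2"),
]
```

Writes always land on the primary, while reads rotate across the replicas.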

Replication

Changes on one database (the primary, or master) need to be sent to the replicas to keep them consistent.

Synchronous Replication

The main database waits for changes to be written to the replica before acknowledging the commit. This offers stronger consistency but adds latency to every write.

Asynchronous Replication

The main database does not wait for changes to be written to the replica before acknowledging the commit. This offers higher write performance, but replicas can lag behind, so reads from a replica may return stale data.

- The primary can push changes to the replica,

- or the replica can periodically pull changes from the primary. It can be implemented either way.

- Most database systems provide replication built in; it only needs to be configured.
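The trade-off between the two modes can be sketched with a toy model. `Primary`, `Replica`, and `ship_pending` are hypothetical names; a real database ships changes over the network, not through in-process calls.

```python
from collections import deque


class Replica:
    def __init__(self):
        self.data = {}


class Primary:
    def __init__(self, replica, synchronous):
        self.data = {}
        self.replica = replica
        self.synchronous = synchronous
        self.pending = deque()  # changes not yet applied on the replica

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            self.replica.data[key] = value  # wait for the replica, then acknowledge
        else:
            self.pending.append((key, value))  # acknowledge now, ship later
        return "ack"

    def ship_pending(self):
        """Stand-in for the background replication stream."""
        while self.pending:
            key, value = self.pending.popleft()
            self.replica.data[key] = value


sync_replica, async_replica = Replica(), Replica()
Primary(sync_replica, synchronous=True).write("x", 1)

async_primary = Primary(async_replica, synchronous=False)
async_primary.write("x", 1)
stale_read = async_replica.data.get("x")  # replica has not caught up yet
async_primary.ship_pending()
fresh_read = async_replica.data.get("x")
```

With synchronous replication the replica already has the value when the write is acknowledged; with asynchronous replication there is a window in which the replica returns nothing (or an old value).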

Sharding

What if we get a huge volume of writes? This is where sharding helps.

- Sharding means partitioning data across multiple databases.

- Because one node cannot handle the data load, we split the data into multiple mutually exclusive subsets. Writes for a particular row/document go to one particular shard.

- The API server needs to know which shard to connect to.

- Note: some databases have a proxy that takes care of routing.

- Each shard can have its own replicas (if needed).
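One common way to decide which shard owns a key is to hash the key and take it modulo the number of shards. The sketch below assumes that scheme; `ShardedStore` is a hypothetical class, and each dictionary stands in for a separate database.

```python
import hashlib


class ShardedStore:
    def __init__(self, num_shards):
        # Each dict stands in for one database node.
        self.shards = [{} for _ in range(num_shards)]

    def shard_for(self, key):
        # Use a stable hash; Python's built-in hash() is randomised per process for strings.
        digest = hashlib.sha256(key.encode()).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def put(self, key, value):
        self.shards[self.shard_for(key)][key] = value

    def get(self, key):
        return self.shards[self.shard_for(key)].get(key)


store = ShardedStore(num_shards=3)
for user_id in ("user:1", "user:2", "user:3", "user:4"):
    store.put(user_id, {"id": user_id})
```

Every key lives on exactly one shard, so a read only has to touch that shard. A drawback of plain modulo hashing is that changing the number of shards remaps most keys.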

Advantages of sharding

- Handle large Reads and Writes

- Increase overall storage capacity

- Higher availability

Disadvantages of sharding

- Operationally complex

- Cross-shard queries are expensive

Sharding: a method of distributing data across multiple machines.

Partitioning: splitting data into subsets, within a single instance or across several.

Overall, a database is sharded while the data is partitioned (split across).

Example: a 100 GB dataset split into 3 partitions (40, 40, and 20 GB) distributed across 2 shards.

Partitioning

- Horizontal partitioning (most common)

  - some rows of a table go in one DB and the remaining rows in another

- Vertical partitioning

  - e.g. three tables live in DB1 and four other tables live in DB2

  - it is like moving from a monolith to microservices

Deciding which one to pick depends on load, use case, and access pattern.

Non-Relational DB

“Non-relational” is a very broad label for databases that are not relational (that is, unlike MySQL, PostgreSQL, etc.). It does not mean that all non-relational databases are similar.

Most non-relational databases support sharding out of the box.

Important DB types

Document DB

- e.g. MongoDB, Elasticsearch

- Mostly JSON based

- Support complex queries

- Partial updates to a document are possible

  - i.e. no need to fetch the whole record, edit it, and write it back

Key Value Stores

- e.g. Redis, DynamoDB, Aerospike

- Extremely simple databases with limited functionality

  - GET(k)

  - PUT(k, v)

  - DEL(k)

- Do not support complex queries (aggregations)

- Can be heavily sharded and partitioned

- Use cases: profile data, order data, auth data

Graph DB

- e.g. Neo4j, Neptune, Dgraph

- Think of it as a database built around the graph data structure

- It stores data represented as nodes, edges, and relations

- Great for running complex graph algorithms

- Powerful for modelling social networks, recommendations, and fraud detection

How to pick the right database?

Common misconception: picking a non-relational DB because relational databases “do not scale”.

Why do non-relational DBs scale?

- There are no relations and constraints

- Data is modelled to be sharded

If we relax the same things on a relational DB, it can scale too:

- do not use foreign key checks

- do not use cross-shard transactions

- shard manually

Does this mean no DB is different? No! Every database has its own particular properties and guarantees, and if you need those, you pick that DB.

How does this help in designing a system?

While designing any system, do not jump to a particular DB right away:

- understand what data you are storing

- understand how much data you will be storing

- understand how you will be accessing the data

- understand what kind of queries you will be running

- note any special features you expect, e.g. expiration

Caching

Caches are anything that helps you avoid an expensive network I/O, disk I/O, or CPU computation, such as:

- API call to get profile information

- Reading a specific line from a file

- doing multiple table joins

Store frequently accessed data in a temporary storage location to improve performance.

Caching is nothing but a glorified HashMap.

Caching is the technique; caches are the places where we store the data.

e.g. Redis (very popular), Memcached.

Use cases

- In my system, something recently published will be accessed more (e.g. a tweet, YouTube video, blog post, or recent news), so let me cache it.

- I noticed my recommendation system started pushing this channel's four-year-old videos, so let me cache them.

- Auth tokens: authentication tokens are cached to avoid load on the database when tokens are checked on every request.

- Live stream: the last 10 minutes of a live stream are cached on the CDN, as they are accessed the most.

Populate Cache

- Logically, the cache mostly sits between the API server and the database.

Lazy Population

Populate the cache on demand, when data is requested and is not yet present in the cache. This is also called “cache aside”.

If the data is requested and not found in the cache, the API server fetches the data from the database, caches it, and then returns it to the client. This approach is simple to implement. The main downside is that the first request for a piece of data will always be slow because it requires a database lookup.

e.g. Caching Blogs
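The lazy (cache-aside) flow described above can be sketched as follows. `SlowDatabase` and `get_blog` are hypothetical names, and a plain dictionary stands in for a cache such as Redis or Memcached.

```python
class SlowDatabase:
    """Hypothetical stand-in for the real database; counts how often it is hit."""

    def __init__(self, rows):
        self.rows = rows
        self.reads = 0

    def fetch(self, key):
        self.reads += 1
        return self.rows.get(key)


cache = {}  # stands in for Redis/Memcached


def get_blog(db, blog_id):
    if blog_id in cache:  # cache hit: no database access
        return cache[blog_id]
    blog = db.fetch(blog_id)  # cache miss: go to the database
    if blog is not None:
        cache[blog_id] = blog  # populate the cache for later requests
    return blog


db = SlowDatabase({"42": "Intro to system design"})
first = get_blog(db, "42")   # miss, reads the database
second = get_blog(db, "42")  # hit, served from the cache
```

Only the first request pays for the database lookup; the sketch leaves out expiry and invalidation, which a real cache needs.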

Eager Population

Populate the cache proactively, either at the time of data creation or updates, or through a background process that periodically refreshes the cache.

It means pre-loading the cache with data even before clients request it. This ensures the data is already in the cache when it is needed, resulting in fast reads.

However, it requires more resources, and data in the cache can become stale if not regularly updated.

e.g.

- A popular person with 1M followers has tweeted; let's cache this person's tweets up front.

- People are watching cricket; serve the match score from the cache.
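A minimal sketch of eager population for the match-score example, assuming the cache is refreshed on every write. `ScoreService` and the match identifier are hypothetical, and dictionaries stand in for the database and the cache.

```python
class ScoreService:
    """Hypothetical service that writes to the database and the cache together."""

    def __init__(self):
        self.database = {}
        self.cache = {}

    def update_score(self, match_id, score):
        self.database[match_id] = score
        self.cache[match_id] = score  # eager: the cache is refreshed at write time

    def get_score(self, match_id):
        return self.cache.get(match_id)  # reads are served from the cache


service = ScoreService()
service.update_score("ind-vs-aus", "120/2")
service.update_score("ind-vs-aus", "126/2")
latest = service.get_score("ind-vs-aus")
```

Readers never wait for a database lookup, at the cost of extra work on every write.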

Scaling Techniques for Caches

- Everything in the Scaling Databases section above applies here as well.

Caching at Different Levels

- Client side: the cache is the browser

  - e.g. browser caching: saving static assets like images, CSS, and JS files

- Application side: inside your code or the API server itself

  - e.g. in Java or Python, using hash maps to cache the results of frequently called functions, or even the whole result of an API call

- CDN side: caching on a CDN (Content Delivery Network)

  - e.g. caching static assets like images and videos

- Database side: cache the result of a query so that subsequent calls to the same query do not hit the database

  - e.g. query caching, where the database supports it

  - e.g. caching indexes, or the results of the queries themselves

  - Precomputed columns: instead of computing a user's total posts every time, store `total_posts` as a column and update it once in a while. This saves an expensive computation such as `SELECT COUNT(*) FROM posts WHERE user_id = 123`.

- Network side: using a proxy cache

  - e.g. reverse proxy caching of HTTP responses

  - caching on the load balancer

- Remote/distributed cache, e.g. Redis

  - the API server uses it to store frequently served data
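For the application-side level in the list above, Python's standard library already offers an in-process cache through `functools.lru_cache`. The sketch below assumes a hypothetical `total_posts` function whose body stands in for an expensive query; the data it returns is made up.

```python
from functools import lru_cache

calls = 0  # counts how often the "expensive" body actually runs


@lru_cache(maxsize=1024)
def total_posts(user_id):
    """Pretend this runs an expensive COUNT(*) query."""
    global calls
    calls += 1
    return {123: 57}.get(user_id, 0)  # hypothetical data


total_posts(123)
total_posts(123)  # served from the in-process cache
info = total_posts.cache_info()
```

This kind of cache is private to one process, so each API server keeps its own copy; `total_posts.cache_clear()` drops the cached entries when the underlying data changes.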

Async Processing

- Spinning up a virtual machine (EC2) can be done asynchronously. Making the client wait 5 minutes for the HTTP response until the machine is up is not a good experience.

- Typically, long-running tasks are handled asynchronously.
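A sketch of the idea, assuming an API that accepts the request, returns a job ID immediately, and lets the client ask for the status later. The handler names, `provision_vm`, and the response shapes are all hypothetical; a thread pool stands in for a real background worker fleet.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor

executor = ThreadPoolExecutor(max_workers=2)
jobs = {}  # job_id -> Future


def provision_vm(name):
    """Stand-in for a slow operation such as starting a VM."""
    return f"{name} is running"


def handle_create_vm_request(name):
    job_id = str(uuid.uuid4())
    jobs[job_id] = executor.submit(provision_vm, name)  # runs in the background
    return {"status": "accepted", "job_id": job_id}     # respond immediately


def handle_status_request(job_id):
    future = jobs[job_id]
    if not future.done():
        return {"status": "pending"}
    return {"status": "done", "result": future.result()}


response = handle_create_vm_request("web-1")
jobs[response["job_id"]].result(timeout=5)  # demo only: wait for completion
status = handle_status_request(response["job_id"])
executor.shutdown()
```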

Message Queues / Brokers

Brokers help two services or applications communicate through messages. We use message brokers when we want to do something asynchronously:

- Long-running tasks

- Triggering dependent tasks across machines

Example: video processing

Examples of brokers: SQS (Simple Queue Service, by Amazon) and RabbitMQ (an open-source message broker).

Features

- Brokers help us connect different sub-systems

- Brokers act as a buffer for the messages/tasks

  - i.e. a service can delegate as many tasks as it wants; they wait in the queue, and workers pick them up when they are free

- Brokers can retain messages for ‘n’ days; this is configurable

  - SQS retains messages for at most 14 days, after which they expire

- Brokers can re-queue a message if it is not deleted

  - Let's say a worker picked an email-sending job from the queue and crashed while processing it, so it never deleted the job from the queue. After some time, the queue automatically makes the job available again. In SQS this is controlled by the visibility timeout.
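The buffering behaviour above can be sketched inside a single process with Python's `queue.Queue` and one worker thread. A real broker sits between separate services and machines; the email addresses and the `None` stop signal are illustrative assumptions.

```python
import queue
import threading

tasks = queue.Queue()  # plays the role of the broker's buffer
sent = []


def worker():
    while True:
        email = tasks.get()  # blocks until a task is available
        if email is None:    # sentinel: stop the worker
            tasks.task_done()
            break
        sent.append(f"sent to {email}")
        tasks.task_done()


# The producer enqueues work and moves on without waiting for it to finish.
for address in ("a@example.com", "b@example.com", "c@example.com"):
    tasks.put(address)

thread = threading.Thread(target=worker)
thread.start()
tasks.put(None)
tasks.join()   # demo only: wait until every queued task is processed
thread.join()
```

The producer finished enqueueing before the worker even started, which is the point of the buffer: the two sides do not have to run at the same speed.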

SQS Core API Actions

These actions facilitate the core message lifecycle: sending, receiving, and deleting messages.

- `CreateQueue`: This action is used to create a new message queue. You can specify parameters like `QueueName` and choose between a Standard or FIFO (First-In-First-Out) queue type.

- `SendMessage`: This is how producer components introduce messages into the queue. A developer provides the queue's URL and the message body. In FIFO queues, this action might also involve specifying a `MessageDeduplicationId` for exactly-once processing guarantees.

- `ReceiveMessage`: Consumer components call this action to retrieve messages from the queue. When a message is received, it isn't immediately deleted; instead, it becomes temporarily invisible to other consumers for a `VisibilityTimeout` period, allowing the current consumer time to process it.

- `DeleteMessage`: After a consumer successfully processes a message, it must call this action using the unique `ReceiptHandle` provided during the `ReceiveMessage` call. This is the crucial step that prevents the message from being processed again when the visibility timeout expires.
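The receive/delete lifecycle and the visibility timeout can be illustrated with an in-memory model. This is not the AWS SDK: `ToyQueue` and its methods are hypothetical, they only mirror the action names above, and time is passed in explicitly as `now` so the example is deterministic.

```python
import itertools


class ToyQueue:
    """In-memory model of send/receive/delete with a visibility timeout."""

    def __init__(self, visibility_timeout):
        self.visibility_timeout = visibility_timeout
        self.messages = {}  # message_id -> (body, time at which it becomes visible)
        self.receipts = {}  # receipt_handle -> message_id
        self._ids = itertools.count(1)

    def send_message(self, body, now):
        self.messages[next(self._ids)] = (body, now)

    def receive_message(self, now):
        for message_id, (body, visible_at) in self.messages.items():
            if visible_at <= now:
                # Hide the message from other consumers for a while.
                self.messages[message_id] = (body, now + self.visibility_timeout)
                handle = f"receipt-{message_id}-{now}"
                self.receipts[handle] = message_id
                return body, handle
        return None

    def delete_message(self, receipt_handle):
        self.messages.pop(self.receipts.pop(receipt_handle), None)


q = ToyQueue(visibility_timeout=30)
q.send_message("send welcome email", now=0)
body, handle = q.receive_message(now=1)      # consumer 1 takes it, then crashes
hidden = q.receive_message(now=10)           # still invisible to everyone else
body2, handle2 = q.receive_message(now=31)   # timeout expired: delivered again
q.delete_message(handle2)                    # processed successfully this time
empty = q.receive_message(now=100)
```

Because the first consumer never deleted the message, it reappears once the timeout passes; only the explicit delete removes it for good.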

Recommended video: RabbitMQ in 100 Seconds by Fireship.

Message Streams

Apache Kafka is the most popular example; AWS Kinesis is another.

- Similar to message queues, but designed for high-throughput, real-time data streaming to multiple different clients

Apache Kafka allows us to organize data into topics, with each topic having multiple partitions for parallel read/write operations. Consumers read data from these topics, and multiple consumer groups can independently process the same data streams.

The User Service publishes “User Created” events to a Kafka topic. Both the Email Service and Analytics Service subscribe to this topic, enabling real-time email campaigns and analytics updates.
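The consumer-group behaviour in this example can be sketched with a toy append-only log. This is not the Kafka client API: `ToyTopic` is a hypothetical class with a single partition, and the group names simply echo the services mentioned above.

```python
from collections import defaultdict


class ToyTopic:
    """Append-only log; each consumer group tracks its own read offset."""

    def __init__(self):
        self.log = []
        self.offsets = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, event):
        self.log.append(event)  # events are never removed when they are read

    def poll(self, group):
        events = self.log[self.offsets[group]:]
        self.offsets[group] = len(self.log)  # commit the new offset
        return events


topic = ToyTopic()
topic.publish({"type": "UserCreated", "user_id": 1})
topic.publish({"type": "UserCreated", "user_id": 2})

email_events = topic.poll("email-service")
analytics_events = topic.poll("analytics-service")  # sees the same events
topic.publish({"type": "UserCreated", "user_id": 3})
email_next = topic.poll("email-service")            # only the new event
```

Reading does not consume the event for everyone; each group advances its own offset, so both services process every event independently.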

Difference between Kafka and SQS

- In SQS (a message broker), multiple consumers can poll the queue, but any given message is intended to be processed by only one receiver at a time, i.e. each message is processed once by a single consumer.

- In Apache Kafka, multiple consumer groups can independently process the same data streams, i.e. the same message is processed by multiple different services.

- SQS is better suited to task queues.